In some cases, the Buying Journey or the Conversions report shows a total number of visitors or conversions, but when you click Performance review, those numbers change. The exposed and non-exposed segments don't add up to the original totals, which can be confusing at first.

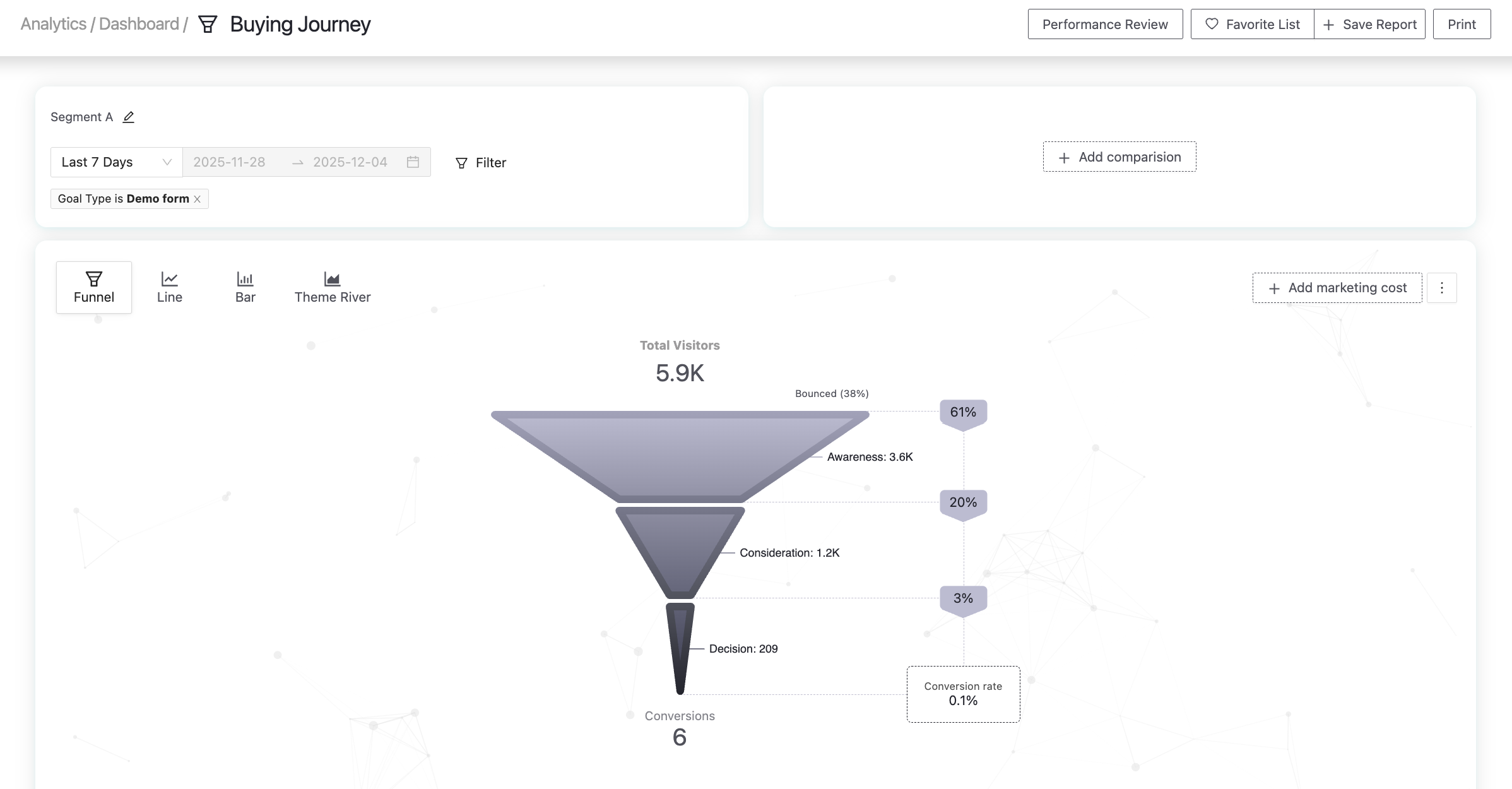

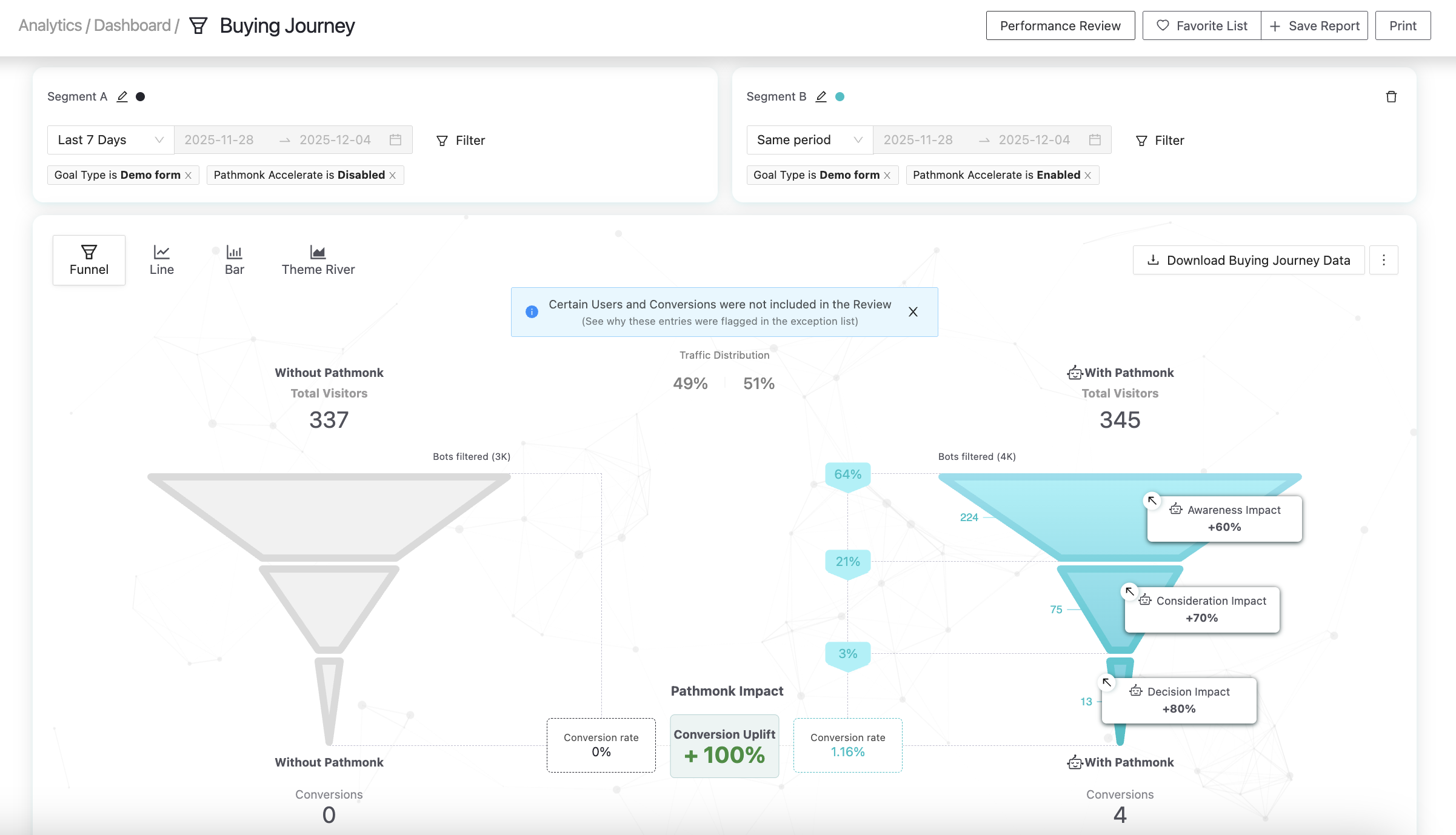

Take the images above as an example:

Before clicking "Performance review", we see a total of 5.9K visitors and 6 conversions.

After clicking "Performance review", the segments add up to only 682 visitors and 4 conversions.

This happens because some visitors simply can’t be assigned to either segment. Pathmonk can’t classify them as “exposed” or “non-exposed,” so they’re not included in the A/B test breakdown, even though they still appear in your overall totals.

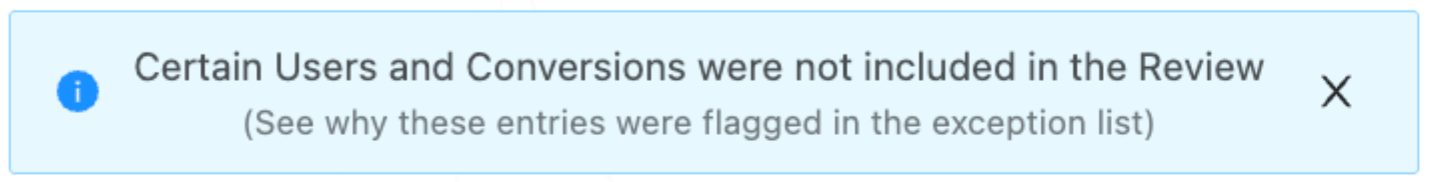

When this occurs, you’ll see a small notification at the top of the Performance review explaining that some visitors aren’t included in the comparison.

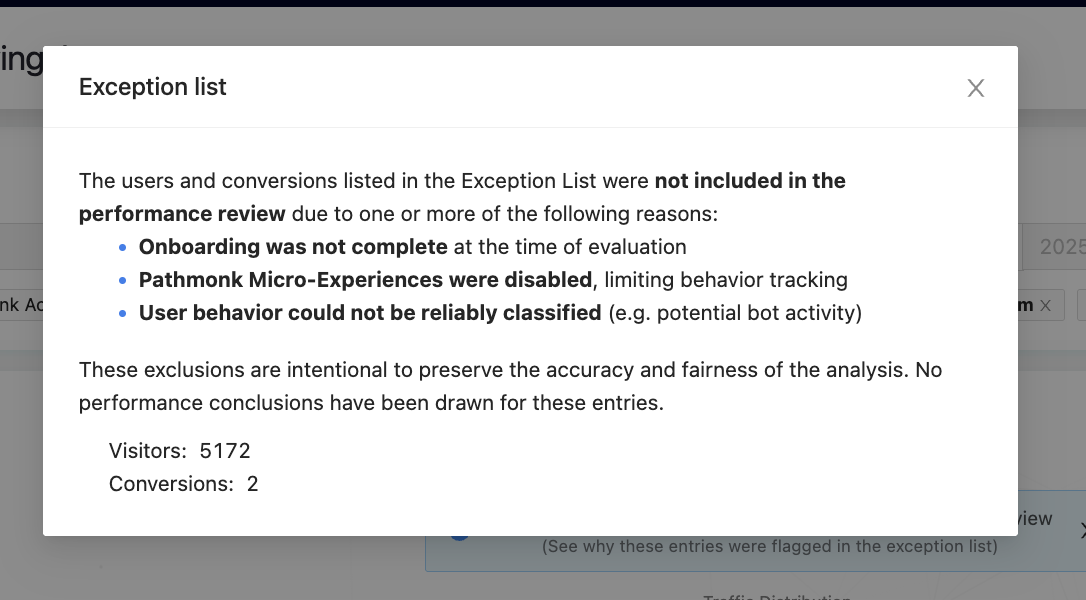

If you click that message, you'll see the remaining visitors and conversions, along with a list of possible reasons why these metrics were left out of the A/B test.

1. Onboarding was not complete at the time of evaluation

This scenario occurs when Pathmonk has already started tracking activity on your website (because the snippet is installed), but your microexperiences are not live yet. Until you go live, the A/B test cannot begin.

Any visitors who arrive during this interim period fall into a “pre-A/B test” bucket. Pathmonk can track these visits and conversions, but it cannot classify them as exposed or non-exposed because the experiment hasn’t officially started.

As a result:

- These visitors do count toward your total traffic.
- Their conversions also appear in your overall metrics.
- They cannot, however, be included in the A/B breakdown inside the Performance review.

They will always remain in their own separate group so the A/B data stays clean and reliable.

This only happens at the very beginning of onboarding and usually affects a small number of visitors: those who land on the site between the moment the snippet is installed and the moment microexperiences are activated. Learn more about what a Pathmonk A/B test is.

2. Microexperiences were disabled

If microexperiences are turned off for any period, Pathmonk can no longer evaluate whether a visitor could have been exposed or not. Segmentation depends on knowing whether the experience was available at the time of the visit.

During any period in which microexperiences are disabled, Pathmonk can still track visits and conversions, but it cannot reliably classify those users as "exposed" or "non-exposed". As a result, those sessions are excluded from the A/B breakdown.

This can happen if someone toggles Pathmonk off in the Display settings, or if the site temporarily disables scripts during maintenance.

3. User behavior could not be reliably classified (e.g., potential bot activity)

Pathmonk automatically filters out traffic that doesn’t look like real human behavior. Examples include:

- Extremely fast page interactions
- Repeated identical patterns
- Suspicious or inconsistent user-agent data
- Known bot activity

This exclusion protects the integrity of your experiment by ensuring that only real user behavior influences your conversion uplift.
