The Bot Traffic report helps you understand how much of your website traffic comes from non-human sources. Pathmonk automatically detects and tracks bot visits so you can distinguish between genuine user interactions and automated activity, ensuring that your analytics remain accurate and trustworthy.

How Pathmonk detects bot traffic

Pathmonk identifies bot traffic by analyzing behavioral and technical signals that don’t match typical human navigation, for example:

Extremely high page request rates in short timeframes.

Visits with no scrolling, no clicks, or zero interaction signals.

Requests coming from known crawler networks or data centers.

Repeated visits from identical user agents or IPs associated with bots.

Pathmonk continuously updates its detection model to include known crawlers (like Googlebot or BingBot) and suspicious automated behaviors.
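To make the signals above concrete, here is a minimal Python sketch of a rule-based check that combines them. It is not Pathmonk’s detection model, which isn’t documented here. The `Visit` fields, the crawler token list, and both thresholds are hypothetical values chosen for illustration, and the data-center flag is assumed to come from a separate IP lookup.

```python
from dataclasses import dataclass


# Hypothetical per-visit record; field names are illustrative, not Pathmonk's schema.
@dataclass
class Visit:
    user_agent: str
    ip: str
    requests_per_minute: float
    scrolls: int
    clicks: int
    from_datacenter: bool  # assumed to be set by a separate IP-to-network lookup


# A few real crawler user-agent tokens; a production list would be far longer.
KNOWN_CRAWLER_TOKENS = ("googlebot", "bingbot", "applebot", "bytespider", "baiduspider")

# Illustrative thresholds (assumptions, not Pathmonk values).
MAX_HUMAN_REQUESTS_PER_MINUTE = 30
MAX_VISITS_PER_IP = 100


def bot_signals(visit: Visit, visits_per_ip: dict[str, int]) -> list[str]:
    """Return the names of the heuristics this visit triggers."""
    signals = []
    ua = visit.user_agent.lower()
    if any(token in ua for token in KNOWN_CRAWLER_TOKENS):
        signals.append("known_crawler")
    if visit.requests_per_minute > MAX_HUMAN_REQUESTS_PER_MINUTE:
        signals.append("high_request_rate")
    if visit.scrolls == 0 and visit.clicks == 0:
        signals.append("no_interaction")
    if visit.from_datacenter:
        signals.append("datacenter_network")
    if visits_per_ip.get(visit.ip, 0) > MAX_VISITS_PER_IP:
        signals.append("repeated_ip")
    return signals


def is_bot(visit: Visit, visits_per_ip: dict[str, int]) -> bool:
    signals = bot_signals(visit, visits_per_ip)
    # A self-declared crawler is decisive. Otherwise require two signals, since one
    # alone (e.g. a reader who never scrolls) is weak evidence.
    return "known_crawler" in signals or len(signals) >= 2


human = Visit("Mozilla/5.0 (Windows NT 10.0; Win64; x64)", "198.51.100.7", 2.0, 5, 1, False)
crawler = Visit("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
                "203.0.113.5", 10.0, 0, 0, True)
print(is_bot(human, {}))    # False
print(is_bot(crawler, {}))  # True
```

Requiring more than one signal is a deliberate trade-off in this sketch: it avoids flagging real visitors who read without interacting, at the cost of missing bots that trip only one rule. User-agent strings can also be spoofed, which is why network and behavior signals matter alongside them.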

Important: Pathmonk tracks bot traffic for visibility and transparency, but bot traffic is never counted toward your pageview-based subscription. Your billing only reflects genuine, human interactions.

Bot traffic evolution

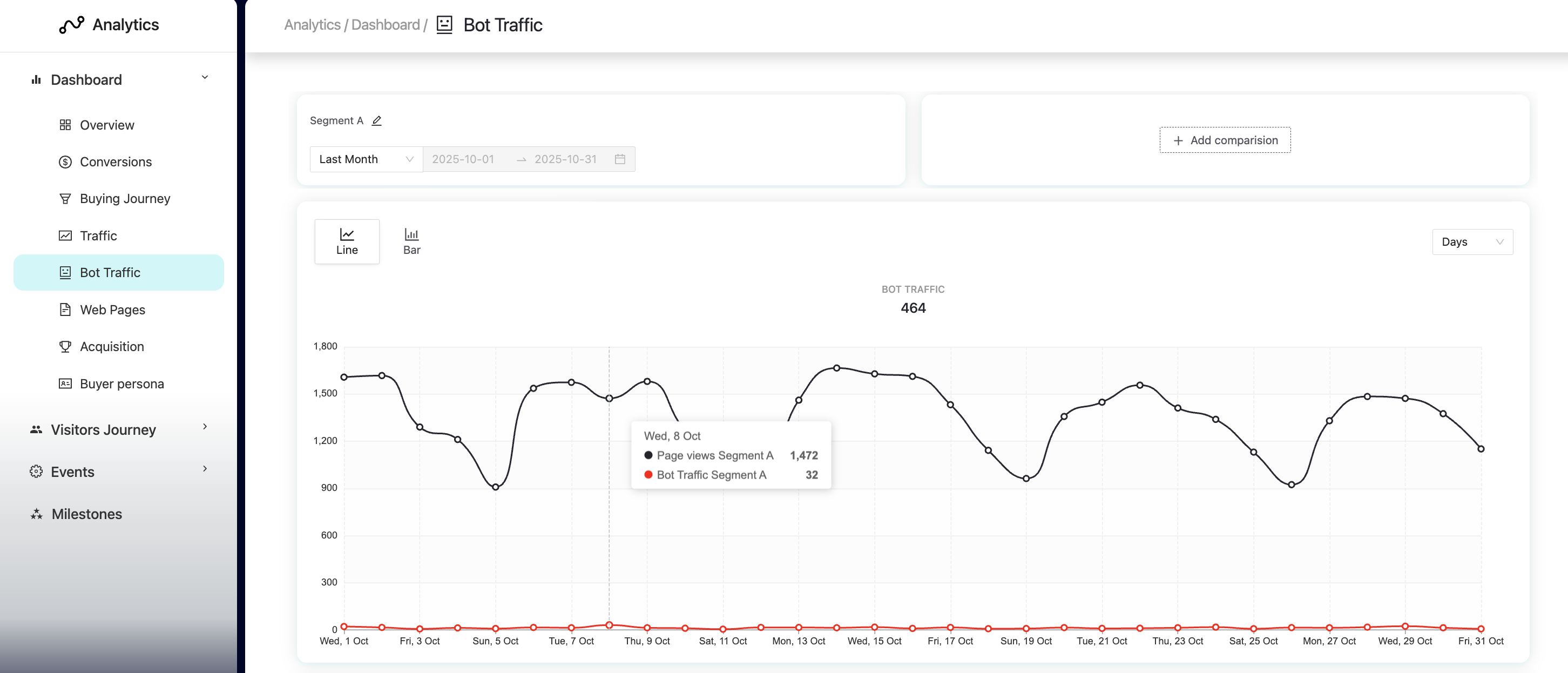

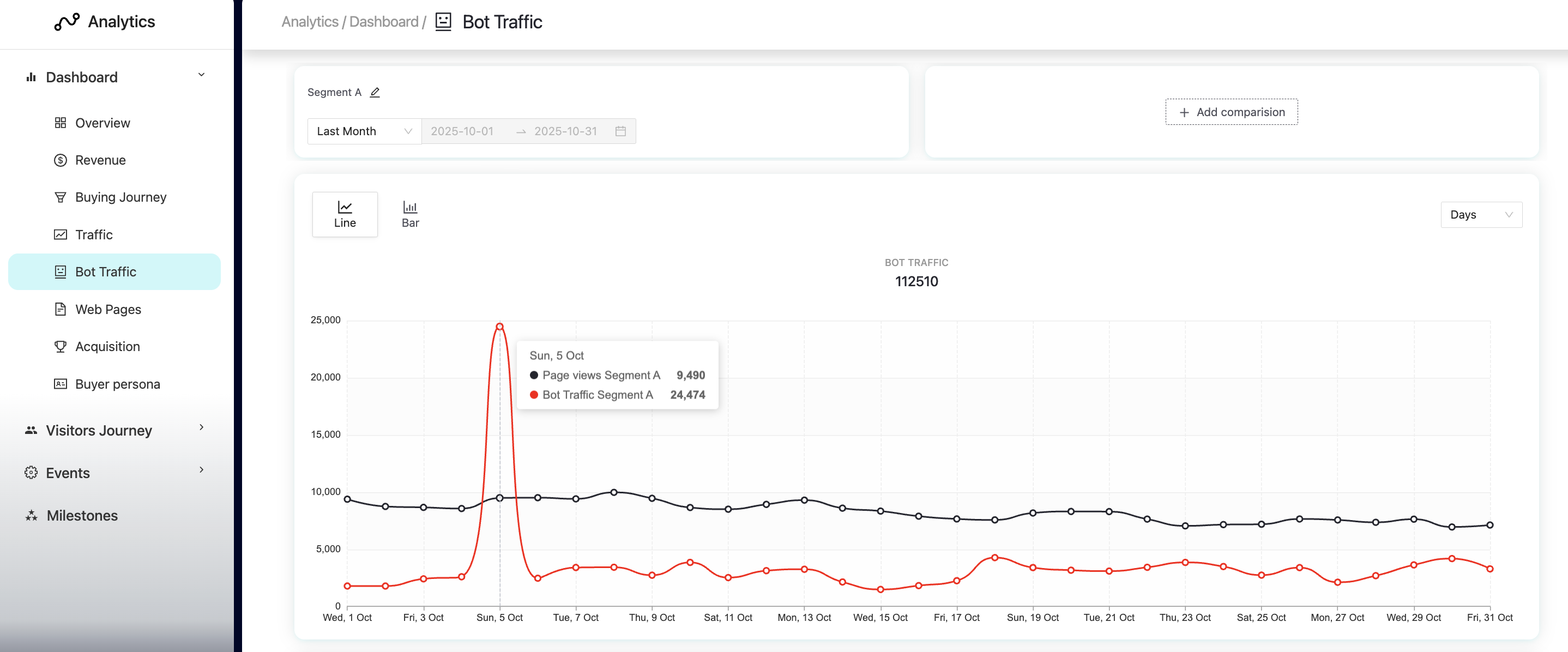

When you open the Bot Traffic report, you’ll see a chart that visualizes how bot activity evolves over time.

The black line represents total pageviews for the selected segment.

The red line represents bot traffic detected during the same period.

You can use the date range selector to analyze any period (daily, weekly, or monthly) and identify unusual peaks.

In a typical case, like the example above, you’ll see a low and stable red line, showing minimal bot activity.

However, a sudden spike, like the one a customer recently experienced (shown below), indicates a bot attack or an unusual crawl event. These peaks show when bots are inflating your raw traffic metrics, so you can take preventive action or monitor their impact.

Pathmonk shows the total number of detected bots at the top of the chart, alongside pageviews, so you can compare how much of your total traffic is automated versus real.

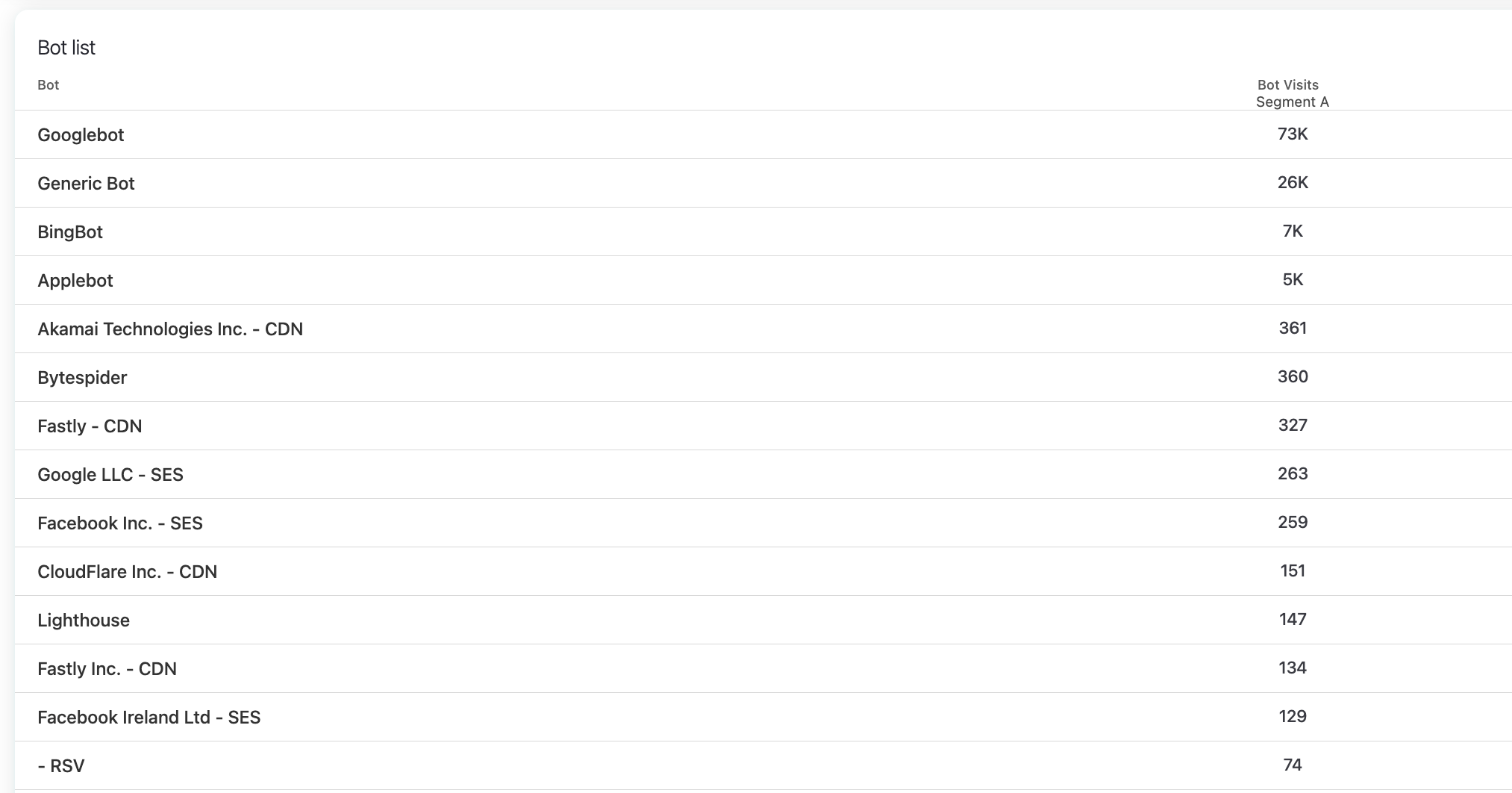
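Given a daily series of pageviews and bot visits (for example, read off this chart), a short Python sketch can reproduce the two checks described above: the share of traffic that is automated, and the days that stand out as spikes. The "three times the median" rule is an assumption for illustration, not how Pathmonk flags peaks, and the share calculation assumes the pageview total includes the bot visits.

```python
from statistics import median


def bot_share(pageviews: int, bot_visits: int) -> float:
    """Fraction of recorded traffic attributed to bots (0.0 when there is no traffic).

    Assumes `pageviews` is a total that already includes the bot visits.
    """
    return bot_visits / pageviews if pageviews else 0.0


def flag_spikes(daily_bots: list[int], factor: float = 3.0) -> list[int]:
    """Return indexes of days whose bot count exceeds `factor` times the period median.

    Expects at least one day of data.
    """
    # Floor the baseline at 1 so a period with a zero median doesn't flag every non-zero day.
    baseline = max(median(daily_bots), 1)
    return [day for day, count in enumerate(daily_bots) if count > factor * baseline]


daily_bots = [12, 15, 11, 14, 480, 13, 12]
print(flag_spikes(daily_bots))                     # [4]
print(round(bot_share(5000, sum(daily_bots)), 3))  # 0.111
```

The median is used as the baseline rather than the mean because one large spike inflates the mean and can mask smaller spikes in the same period.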

Bot source list

Below the evolution chart, you’ll find the Bot list, a detailed breakdown of where the bot traffic came from.

Each row includes:

The bot name or provider (e.g., Googlebot, BingBot, Applebot).

The number of visits detected for that bot during the selected period.

You can use this section to better understand which automated sources are interacting with your website and take action if you see any unexpected patterns.
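Conceptually, a list like this is a count of visits grouped by bot name. The Python sketch below shows one way to build such a list from raw user-agent strings. The token-to-name mapping is a hypothetical, abbreviated example, and matching on the user agent alone is a simplification, since user agents can be spoofed.

```python
from collections import Counter

# Hypothetical mapping from a lowercase user-agent token to a display name.
BOT_NAMES = {
    "googlebot": "Googlebot",
    "bingbot": "BingBot",
    "applebot": "Applebot",
    "bytespider": "Bytespider",
    "baiduspider": "Baidu Spider",
}


def bot_list(user_agents: list[str]) -> list[tuple[str, int]]:
    """Count visits per known bot, most frequent first; unmatched user agents are ignored."""
    counts: Counter[str] = Counter()
    for ua in user_agents:
        lowered = ua.lower()
        # First matching token wins; None means the visit isn't from a listed bot.
        name = next((label for token, label in BOT_NAMES.items() if token in lowered), None)
        if name is not None:
            counts[name] += 1
    return counts.most_common()


hits = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
]
print(bot_list(hits))  # [('Googlebot', 2), ('BingBot', 1)]
```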

Understanding your bot sources

Not all bot traffic is malicious, but understanding where it comes from helps you make smarter marketing and media-buying decisions.

For instance, if you’re running paid campaigns, you’ll likely see bot sources such as Google, Facebook, or LinkedIn in this list. These platforms often send automated verification or click-check bots that can inflate your ad metrics without generating any real engagement.

Here’s how to interpret common patterns and take action:

Googlebot, BingBot, Applebot: Legitimate crawlers indexing your website. These are normal and safe; no action is needed.

Ad platforms (e.g., Google Ads, Facebook, LinkedIn): Seeing high bot activity from these sources can indicate ad impressions or clicks generated by automated systems. This often happens when Google Display Partners or broad audience networks are enabled.

→ Tip: Narrow your targeting or disable partner networks to reduce irrelevant traffic.

CDNs and Cloud Services (e.g., Akamai, Cloudflare, Fastly): These bots cache your site content for faster delivery. They don’t harm performance but can temporarily spike traffic during configuration changes.

Unknown or generic bots: High activity from “Generic Bot,” “Bytespider,” or “RSV” often points to scraping or non-indexing bots. If frequent, review your robots.txt or firewall rules to block suspicious user agents.

Regional bots (e.g., Baidu Spider): If your business doesn’t target those markets, you can safely exclude these crawlers using location-based or IP restrictions.
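The blocking options above can be sanity-checked before you deploy them. The Python sketch below uses the standard library’s `urllib.robotparser` to confirm that a robots.txt rule blocks Bytespider while leaving other crawlers alone, and `ipaddress` to test visitor IPs against a blocklist. The rules and IP ranges are placeholders (203.0.113.0/24 and 2001:db8::/32 are reserved documentation ranges). Keep in mind that robots.txt is only honored by crawlers that choose to respect it, and IP restrictions are usually enforced in your firewall or CDN rather than in application code.

```python
import ipaddress
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt: block Bytespider everywhere, allow every other crawler.
ROBOTS_TXT = """\
User-agent: Bytespider
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Bytespider", "Googlebot"):
    print(agent, parser.can_fetch(agent, "https://example.com/pricing"))
# Bytespider False
# Googlebot True

# Placeholder blocklist using reserved documentation ranges; substitute the ranges you want to restrict.
BLOCKED_NETWORKS = [ipaddress.ip_network(cidr) for cidr in ("203.0.113.0/24", "2001:db8::/32")]


def is_blocked(ip: str) -> bool:
    """True if `ip` falls inside any blocked network (IPv4/IPv6 mismatches never match)."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in BLOCKED_NETWORKS)


print(is_blocked("203.0.113.42"))  # True
print(is_blocked("198.51.100.7"))  # False
```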

Our recommendation: Use sudden increases in bot traffic as a signal to review your ad setup and audit your traffic quality. It’s often the fastest way to detect when paid campaigns are wasting spend on non-human clicks.
